Skipping or skimping on testing creates risks that can endanger the success of any software project. But what exactly are the practical drawbacks of poor software quality caused by inadequate testing?

Naturally, with rampant digitalization everywhere, software quality is a crucial consideration, so effective testing is vital. Most software teams know this – but testing takes time and effort that could otherwise be spent delivering new features or products to the market.

This series of posts drills down into the problems that arise when software teams skip or miss tests, apply inadequate testing strategies, or otherwise fail to test thoroughly. For each problem that results from missing tests, we’ll provide real-life examples and a quick analysis of what went wrong. In this post, part 1, we focus on the obvious: bugs and errors in software, using the example of the infamous healthcare.gov blunder of 2013.

Bugs and errors in poorly tested software

All developers know this: software errors can slip through to production and become a source of headaches for both developers and the organizations they work for. Without sufficient testing, the chance of bugs making it into the released product is higher.

While we know bugs are “inevitable”, just how widespread they are isn’t very clear: sources cite figures ranging from 15-50 up to 100-150 errors per thousand lines of code. Whichever figure is closer to the truth, software errors exist and cause real-life problems, as you likely know from experience.

Besides being annoying, bugs can also pose grave risks to the end user (for instance, in safety-critical software). Such issues can cost the company credibility and profitability and, in some cases, can even harm the user or the environment.

While it caused no physical harm, the healthcare.gov launch is one example of a serious and entirely avoidable software blunder.

The botched healthcare.gov launch

One major part of President Obama’s legacy was the Affordable Care Act, which aimed to improve access to healthcare services for all Americans. Citizens were supposed to start their journey toward coverage on the healthcare.gov website, which launched on 1 October 2013.

The goal of this website was to help Americans compare healthcare plans, provide eligibility information on various federal subsidies, and make it possible for them to enroll. The website was intended to cover 36 states and to manage the healthcare enrollment process for all residents of those states.

However, a number of critical technical and software issues prevented most residents from accessing the services offered by healthcare.gov. In fact, only 6 users managed to apply for and select a health insurance plan on the day the website was launched. Within 2 hours of launching, the website broke down.

At first, the issue was thought to be excessive demand for the website, and at this point insufficient load testing may be your prime suspect. But very soon, several other software errors were identified. Redditors immediately started to pick the website apart, even finding typos in string names, an easily avoidable issue.

Overall, problems with the website led to the project’s budget growing from the initially planned $93.7M to a whopping $1.7B. But what exactly went wrong?

The problems with healthcare.gov

The project suffered from a wide variety of problems, not all of them software-related. Project management in particular has been criticized for creating silos within the software development organization.

However, quite a few serious software issues also contributed to the website breaking down. It seems that the front-end website was never successfully connected to the back-end infrastructure. That resulted, in part, from the two components being developed by different companies with minimal coordination.

In some cases, dropdown menus were incomplete, and the transfer of user data to insurance companies also had problems, resulting in inaccurate or incomplete data being sent. Initially, the plan was to allow users to browse healthcare plans without logging in, but that feature was delayed and wasn’t ready for the launch. Instead, login was required even for the initial step of gathering information, which created a bottleneck due to the aforementioned lack of sufficient load testing.

Registration itself had further problems in its validation logic. Specifically, user names were required to contain numbers, but this requirement wasn’t communicated in the instructions or failure messages, leaving users completely in the dark about what exactly went wrong with their registrations.
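To make the validation problem concrete, here is a minimal Python sketch of a user name check that reports why it rejects an input, together with tests that pin down the messages. The function name, the message wording, and the minimum length rule are assumptions made for illustration; only the "must contain a number" rule comes from the reports about healthcare.gov.

```python
import re
from typing import List

MIN_LENGTH = 6  # hypothetical rule, not taken from healthcare.gov


def validate_username(username: str) -> List[str]:
    """Return human-readable problems; an empty list means the name is valid."""
    problems = []
    if len(username) < MIN_LENGTH:
        problems.append(f"User name must be at least {MIN_LENGTH} characters long.")
    if not re.search(r"\d", username):
        # The rule users were never told about: state it explicitly.
        problems.append("User name must contain at least one number.")
    return problems


def test_missing_number_is_explained():
    assert validate_username("janedoe") == [
        "User name must contain at least one number."
    ]


def test_valid_name_has_no_problems():
    assert validate_username("janedoe42") == []
```

Testing the failure messages, and not only the accept/reject decision, is what catches the case where a rule exists in the code but is invisible to the user.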

Avoiding bugs through adequate testing

Similar issues can be prevented by testing the software rigorously and thoroughly during development and before deploying it to production. Any project benefits from a good testing strategy that is established from the start and is allocated the necessary resources in the project’s budget.

From what we can tell from public statements about the problems with healthcare.gov, most of them originated from certain kinds of testing being performed with too little scope or not at all. The solution, of course, is to use all relevant testing types.

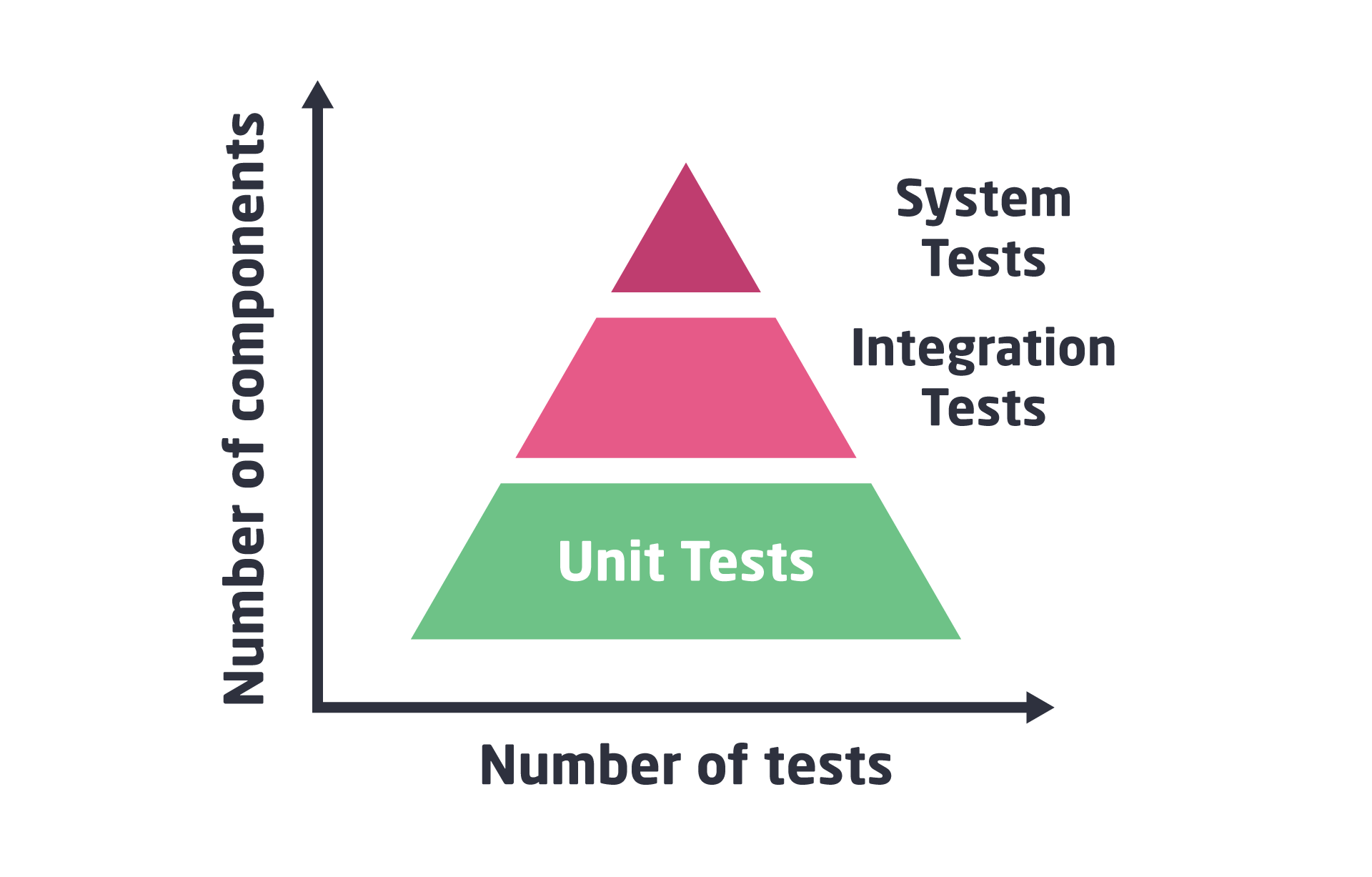

Specifically, in the case of healthcare.gov, it seems the project would have benefited from better integration testing. Since integration tests check whether two or more software components actually work together, proper integration testing could have exposed the mismatches between the front end and the back end well before launch. To find out which scenarios are best validated on which testing levels (unit, integration, or system testing), check out our blog post about the testing pyramid.
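As a rough illustration of what an integration test checks, the Python sketch below wires a hypothetical front-end function to a hypothetical back-end function and asserts that the payload produced by one is accepted by the other. All names and fields are invented for this example and say nothing about how healthcare.gov was actually built.

```python
# Hypothetical "front-end" component: turns form input into a request payload.
def build_enrollment_request(form: dict) -> dict:
    return {
        "applicant_name": form["name"].strip(),
        "state": form["state"].upper(),
        "plan_id": form["plan"],
    }


# Hypothetical "back-end" component: accepts or rejects a payload.
REQUIRED_FIELDS = {"applicant_name", "state", "plan_id"}


def submit_enrollment(payload: dict) -> dict:
    missing = REQUIRED_FIELDS - set(payload)
    if missing:
        return {"status": "rejected", "missing": sorted(missing)}
    return {"status": "accepted", "plan_id": payload["plan_id"]}


# Integration test: exercise both components together, with no mocks between them.
def test_front_end_payload_is_accepted_by_back_end():
    form = {"name": " Jane Doe ", "state": "va", "plan": "silver-01"}
    response = submit_enrollment(build_enrollment_request(form))
    assert response == {"status": "accepted", "plan_id": "silver-01"}
```

If the two teams disagreed on a field name, unit tests on each side could still pass, while this test would fail immediately.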


Besides integration testing, the healthcare.gov example also provides ample proof that usability testing should not be skipped. The very basic action of creating an account was confusing for users. Problems like this can occur if the application is never tested with people who were not involved in the project. People who know the requirements tend to explore only certain user paths; outsiders lack that bias. It’s always a good idea to involve users in testing who know next to nothing about the application and to observe how they interact with the software.

To help you navigate the various types of software testing available, check out our blog post about the testing jungle.

Keep in mind: it is safe to assume that something does NOT work until you have tested that it does. But since budget and time constraints mean that you can’t test everything, it’s a matter of analyzing risks, prioritizing areas or functionality to test, and determining the level of testing necessary. For instance, if you’re developing an application for a few hundred thousand users, load testing for millions of users is overkill. Bear in mind the context to determine what kind (and what level) of testing you’ll need for your application, then follow that up with a thorough test strategy that builds on those findings.
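One common way to turn "analyzing risks" into a ranked list is to score each area by likelihood of failure times impact of failure. The Python sketch below assumes that scheme; the 1 to 5 scales, the area names, and the scores are invented for illustration and are not an assessment of any real project.

```python
from typing import List, NamedTuple


class Area(NamedTuple):
    name: str
    likelihood: int  # 1 (rarely fails) to 5 (likely to fail), assumed scale
    impact: int      # 1 (cosmetic) to 5 (blocks users), assumed scale


def prioritize(areas: List[Area]) -> List[Area]:
    """Order areas so that the highest risk score (likelihood * impact) comes first."""
    return sorted(areas, key=lambda a: a.likelihood * a.impact, reverse=True)


areas = [
    Area("footer links", likelihood=2, impact=1),
    Area("account registration", likelihood=4, impact=5),
    Area("plan comparison", likelihood=3, impact=4),
]

for area in prioritize(areas):
    print(f"{area.name}: risk score {area.likelihood * area.impact}")
```

The scores themselves are judgment calls, but writing them down makes it visible which areas get thorough testing first and which are consciously tested less.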
